Upgrading to vSphere vCenter 4.1 Experiences November 1, 2010

Posted by vneil in upgrade, VMware.

I’ve recently completed upgrading all our vSphere vCenter servers to 4.1 and as I came across a few issues I thought I would document them.

We had several vSphere vCenter 4.0U2 servers running on Windows 2003 R2 32-bit virtual machines, and since we needed to move to 64-bit for 4.1 I took the opportunity to switch to Windows 2008 R2 64-bit. A test vCenter server has an MS-SQL Express database on the same server, and two production vCenter servers (in Linked mode) have databases on separate MS-SQL 2005 servers.

We built new Windows 2008R2 64bit servers in preparation and I used the datamigration tool from the vCenter distribution to perform the migration of data and install of 4.1.

Issues

MS-SQL Express database with data migration

With the MS-SQL Express migration, the datamigration tool did not back up the data as expected, yet it also logged no error messages during the backup procedure. The install then runs fine but just creates a new, empty database. This happens when the MS-SQL Express database has been upgraded and the registry settings have been set wrongly. KB article 1024380 describes the problem and the fix.

This extract from backup.log shows a good backup; if it reports a DB type of Custom, the backup will not work:

[backup] Backing up vCenter Server DB...

[backup] Checking vCenter Server DB configuration...

[backup] vCenter Server DB type: bundled
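Since the tool reports no error, the DB type line is the only thing to check before trusting the backup. Here is a minimal sketch of that check, assuming the log text has already been read into a string. The function names are my own, and the "bundled is good" rule applies only to the bundled MS-SQL Express case described above.

```python
import re


def vcenter_db_type(log_text):
    """Return the DB type reported in the backup log text, or None if absent."""
    match = re.search(r"vCenter Server DB type:\s*(\S+)", log_text)
    return match.group(1) if match else None


def backup_looks_good(log_text):
    """True only when the log reports the bundled (MS-SQL Express) database."""
    db_type = vcenter_db_type(log_text)
    return db_type is not None and db_type.lower() == "bundled"


# Sample text copied from the log extract above.
sample = """[backup] Backing up vCenter Server DB...
[backup] Checking vCenter Server DB configuration...
[backup] vCenter Server DB type: bundled
"""

print(backup_looks_good(sample))
```

If the function returns False for an Express setup, stop and apply the KB fix before running the install.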

Running as Administrator

As a non-Windows bod, one thing that keeps catching me out on Windows 2008 R2 is that to do most things you need to explicitly run them as an Administrator, even if your userid is a local administrator. For example, install.cmd from the datamigration tool will start under your normal userid but will fail fairly soon. Right-click on install.cmd and choose “Run as Administrator” for a simple life.

MS-SQL Express – transaction logging from bulk to simple

Another issue with the MS-SQL Express upgrade path was the database transaction log filling up after a few days. Again, not being that au fait with MS-SQL management, I found the solution to this one here in a post by Patters. It seems the upgrade process sometimes leaves the database logging mode (the recovery model) as ‘Bulk-logged’; using the Management Studio Express tool I switched it to ‘Simple’.

MS-SQL 2005 Server databases

I also had a similar problem with just one of the production databases on the MS-SQL 2005 server which was left in “Bulk-logged” mode after the upgrade and the DBA had to switch it back to Simple.
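I made the change through the Management Studio GUI, but the same switch can be done with T-SQL. The sketch below is not what I ran: it only builds the two statements as strings so they can be reviewed or pasted into a query window. The database name VIM_VCDB is an assumption, so substitute your own, and the helper name is made up for illustration.

```python
def recovery_model_statements(database):
    """Build T-SQL to inspect and then switch a database's recovery model.

    Returns (check_statement, switch_statement) as plain strings; nothing
    is executed here.
    """
    # Escape the name for use as a bracketed identifier and as a string literal.
    bracketed = "[" + database.replace("]", "]]") + "]"
    literal = "N'" + database.replace("'", "''") + "'"
    check = ("SELECT name, recovery_model_desc FROM sys.databases "
             "WHERE name = " + literal + ";")
    switch = "ALTER DATABASE " + bracketed + " SET RECOVERY SIMPLE;"
    return check, switch


# VIM_VCDB is an assumed database name, used purely as an example.
check_sql, switch_sql = recovery_model_statements("VIM_VCDB")
print(check_sql)
print(switch_sql)
```

Run the check statement first. If it already reports SIMPLE, the log growth has another cause.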

Sysprep files – all in one directory

As the server was a new install I also needed to repopulate the Sysprep files, following the instructions in the KB article http://kb.vmware.com/kb/1005593 . One thing I missed was expanding the deploy.cab file from the downloaded file. What I ended up doing was to make sure there were no subdirectories under the relevant sysprep directory (srv2003 in this case) and to put all the files from the downloaded file, plus the files from deploy.cab, in that one directory.
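The "no subdirectories" step can be scripted. This sketch covers only the flattening, not the extraction of deploy.cab, and assumes the files have already been unpacked somewhere below the sysprep directory. The function name and the file names in the demo are illustrative; the demo runs against a temporary directory rather than a real vCenter path.

```python
import shutil
import tempfile
from pathlib import Path


def flatten_directory(root):
    """Move every file found in subdirectories of root up into root itself."""
    root = Path(root)
    moved = []
    # Take a full listing first, because the tree changes while we move files.
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.parent != root:
            target = root / path.name
            if target.exists():
                raise FileExistsError(f"{target} already exists, not overwriting")
            shutil.move(str(path), str(target))
            moved.append(target.name)
    # Remove the now-empty subdirectories, deepest first.
    for path in sorted(root.rglob("*"), reverse=True):
        if path.is_dir():
            path.rmdir()
    return moved


# Demo on a throwaway directory tree with placeholder files.
with tempfile.TemporaryDirectory() as tmp:
    sysprep_dir = Path(tmp) / "srv2003"
    (sysprep_dir / "deploy" / "tools").mkdir(parents=True)
    (sysprep_dir / "deploy" / "sysprep.exe").write_text("placeholder")
    (sysprep_dir / "deploy" / "tools" / "setupcl.exe").write_text("placeholder")
    moved = flatten_directory(sysprep_dir)
    remaining = sorted(p.name for p in sysprep_dir.iterdir())

print(moved)
print(remaining)
```

The function refuses to overwrite a file that already exists in the target directory, which seemed the safer default.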

So far we’ve done a few host upgrades of test ESXi hosts to 4.1 without problems, production yet to come.

Linux VMs and OCFS2 shared physical RDM September 27, 2010

Posted by vneil in Linux, storage, VMware.

We’ve recently had the need to set up Linux virtual machines using large shared disks with the OCFS2 cluster filesystem. To complicate matters, some of the Linux servers in the cluster might run as zLinux servers on a mainframe under z/VM, all sharing the same LUNs.

I had set up a couple of test virtual machines in this scenario. I set up the first with a physical RDM of the shared LUN and enabled SCSI bus sharing on the adapter. For the second test virtual machine I pointed it at the mapped VMDK file of the physical RDM from the first virtual machine; this seemed to be the obvious way to share the physical RDM between VMs. This all works, and both Linux servers can use the LUN and the clustered filesystem. The problem I came across is that as soon as you enable SCSI bus sharing, vMotion is disabled. I wanted the ability to migrate these virtual machines if I needed to, so I looked into how to achieve this.

If you only have one virtual machine and do not enable SCSI bus sharing then vMotion is possible. The solution I came up with was to set up each virtual machine the same way, i.e. each has the LUN as a physical RDM. Of course, once you have allocated the LUN to one virtual machine it is no longer visible to any other VM. The easiest way around this is the config.vpxd.filter.rdmFilter configuration option, which is set in the vCenter settings under Administration > vCenter Server Settings > Advanced Settings and is detailed in this KB article. If the setting is not in the list of Advanced Settings it can be added.

As Duncan Epping rightly describes here it’s not really wise to leave this option set to false. What I did was to set the option to false, carefully allocate the LUN to the three Linux servers I was setting up and then set it back to true.

With the virtual machines set up like this, the restriction is that they cannot run on the same host, so I set anti-affinity rules to keep them apart.

It is also possible to do this via the command line, without the need to set the Advanced Setting, as described in this mail archive I found. I haven’t tried this method.

I don’t know if this setup is supported or not but it seems to achieve the goal we need. I’d be interested to hear any opinions on this.

VMware and SLES: a good match? September 12, 2010

Posted by vneil in Linux, VMware.

I’m afraid this is just a bit of a moan. I have seen a few posts lately regarding VMware’s partnership with Novell and SLES. I thought I would post my experience of running an ESXi cluster with SLES Linux virtual machines.

We started running SLES 10 on an ESXi 3.5 cluster, and for the VM tools we started using the RPMs packaged by VMware. This was fine until we upgraded the hosts to ESXi 4.0; after that, VM tools installed from the RPMs show up in vCenter with a status of “unmanaged”. This is as described in the documentation. We then decided to switch to the VM tools installed from the ESXi hosts and VUM. This meant that the status returned to “OK” in vCenter and we had the benefit of being able to upgrade the virtual machines’ VM tools with VUM.

The problem started when we began upgrading our Linux servers from SLES 10 SP3 to SLES 11 SP1. It seems the VM tools distributed with ESXi and downloaded via VUM do not include binary kernel modules for SP1; they have them for SLES 11 GA but not SP1. This is fine if you have SLES 11 SP1 virtual machines with compilers and kernel sources, but our production servers do not have these, for obvious reasons. The upshot is that we have switched back to the RPM-distributed tools and will have to put up with the status saying “unmanaged”.

I have checked the VM tools that come on the ESXi4.1 installation and these also do not have the binary modules for SP1.

This is quite annoying, as we had a small battle with the other Unix admins to switch to using VUM to upgrade VM tools. Now that we have had to switch back to RPMs, it will be almost impossible to ever return to VUM as and when SLES 11 SP1 is properly supported with VMware ESXi.

Using Hyperic to monitor vSphere August 12, 2010

Posted by vneil in monitoring, VMware.

I’ve looked at Hyperic before, as our application team were already using it to monitor their JBoss applications running on Linux and already have it configured to push alerts to our main enterprise monitoring software. I thought I could also use it to push alerts from vCenter, but earlier versions of Hyperic didn’t support vSphere so well, or only supported host servers and not vCenter. On seeing news on the Hyperic site of a new release with improved vSphere support, I decided to give it a test.

I signed up and downloaded the demo installer of Hyperic 4.4, which included the server and agent for Linux. I installed them on a standard SLES server and started the server. This was all quite straightforward, and it also installed a copy of PostgreSQL to use as its database. Once started, the server was accessible from a browser on port 7080. I signed in with the default user of hqadmin.

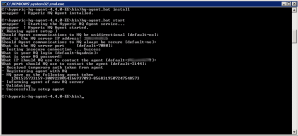

As with most monitoring software, it needs agents. I installed an agent on the Windows server running VMware vSphere Server by downloading the win32 agent from the website and unpacking it on the W2003 server.

Then it’s just a case of running hq-agent.bat install and hq-agent.bat start.

Answer the questions; I took the defaults. When the agent starts it goes off and registers itself with the HQ server using the details given.

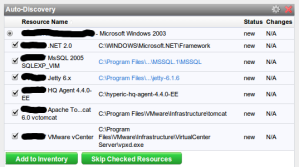

The agent should discover application details from the system it is on and appear after a refresh in the Auto-Discovery pane on the Dashboard of the HQ server. It should show VMware vCenter, select Add to Inventory.

Unfortunately there seems to be a problem with the Windows HQ agent and vSphere, as mentioned here.

You need to edit the agent.properties file in the conf directory of the HQ agent and enter the details you gave when it was installed, then restart the agent with hq-agent restart:

agent.setup.camIP=10.14.0.35

agent.setup.camPort=7080

agent.setup.camSSLPort=7443

agent.setup.camSecure=yes

agent.setup.camLogin=hqadmin

agent.setup.camPword=hqadmin

agent.setup.agentIP=10.14.0.79

agent.setup.agentPort=2144

agent.setup.resetupTokens=no

#

agent.setup.unidirectional=yes
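A quick sanity check before restarting the agent is to confirm that every agent.setup.* key from the listing above has a value. This sketch uses a deliberately simplified key=value parser, not a full Java properties parser. Treating exactly these ten keys as the expected set is my assumption, based only on the listing above.

```python
# Keys taken from the agent.properties listing above.
EXPECTED_KEYS = [
    "agent.setup.camIP",
    "agent.setup.camPort",
    "agent.setup.camSSLPort",
    "agent.setup.camSecure",
    "agent.setup.camLogin",
    "agent.setup.camPword",
    "agent.setup.agentIP",
    "agent.setup.agentPort",
    "agent.setup.resetupTokens",
    "agent.setup.unidirectional",
]


def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def missing_setup_keys(text):
    """Return the expected keys that are absent or empty in the given text."""
    props = parse_properties(text)
    return [key for key in EXPECTED_KEYS if not props.get(key)]


sample = """agent.setup.camIP=10.14.0.35
agent.setup.camPort=7080
agent.setup.camSSLPort=7443
agent.setup.camSecure=yes
agent.setup.camLogin=hqadmin
agent.setup.camPword=hqadmin
agent.setup.agentIP=10.14.0.79
agent.setup.agentPort=2144
agent.setup.resetupTokens=no
#
agent.setup.unidirectional=yes
"""

print(missing_setup_keys(sample))
```

An empty list means every key in the listing has a value. It says nothing about whether the values are right for your HQ server.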

To allow the agent to discover vSphere details, select the Resources tab -> windows server -> hostname VMware vCenter -> Inventory tab. Edit the Configuration Properties (near the bottom of the page) and add a username and password that can access the vCenter SDK. After a few minutes a refresh of the Dashboard should start to show discovered ESX/ESXi hosts and VMs in the Recently Added pane.

The ESXi hosts will show up under the Resources tab under Platforms, along with your agents. Under Resources -> HQ vSphere you get a topology view of vCenter/Host/VM.

There seem to be a lot of options for monitoring and alerting which I haven’t really looked into yet, but it looks quite promising.

A big issue is that it doesn’t seem all that stable at the moment (this could be because it was a quick demo install and I haven’t really tuned it at all). For example, once or twice I deleted one agent in the Resources tab and several resources disappeared. If an agent disappears from view, one way to get it back seems to be to stop the agent, delete the contents of its data directory and restart it (with hq-agent.bat start); it then registers again on the server.

The next problem I encountered was when adding a second vSphere server: the view under Resources -> HQ vSphere got very confused. At first both vCenter servers were listed but the host servers were under the wrong vCenter server; then one of the vCenter servers disappeared completely.

I’ll continue to test it and search forums to see if there are any solutions to these problems.

If agents are installed in the running VMs as well, it seems that you can drill down from your vSphere server to the host server, to the VM and then down to the applications running in that VM. This could prove quite useful when troubleshooting performance issues.

Too many interruptions June 7, 2010

Posted by vneil in Linux, VMware.

We had a strange problem that I noticed the other day, a few of our Linux virtual machines had High CPU alarms in vCenter. Looking into the servers themselves they were completely idle, they had been installed but no applications were running. The CPU stats in Linux showed 99% idle but the performance tab in vCenter for the virtual machine showed over 95% CPU busy. Then I looked at the interrupts per second counter from mpstat:

    # mpstat 5 5
    Linux 2.6.16.60-0.21-smp (xxxx)    05/31/10
    CPU   %user   %nice    %sys  %steal   %idle     intr/s
    all    0.00    0.00    0.60    0.00   99.40  154596.40

A lot of interrupts (intr/s) for an idle system, compare this to a ‘normal’ busy system:

    $ mpstat 5
    Linux 2.6.16.60-0.21-smp (xxxx)    06/03/2010
    CPU   %user   %nice    %sys  %steal   %idle     intr/s
    all   81.24    0.00   18.36    0.00    0.00    9832.73
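With more than a handful of servers to check, the comparison above can be done mechanically. The sketch below pulls the intr/s value for the "all" row out of captured mpstat text and flags anything above a threshold. The threshold of 50000 is an arbitrary assumption chosen to separate the two samples shown here, and the function names are my own.

```python
def interrupts_per_second(mpstat_output):
    """Return intr/s from the 'all' row of mpstat output, or None if not found."""
    offset = None
    for line in mpstat_output.splitlines():
        fields = line.split()
        if "CPU" in fields and "intr/s" in fields:
            # Remember where intr/s sits relative to the CPU column, so any
            # leading timestamp fields on the line do not matter.
            offset = fields.index("intr/s") - fields.index("CPU")
        elif offset is not None and "all" in fields:
            return float(fields[fields.index("all") + offset])
    return None


def has_interrupt_storm(mpstat_output, threshold=50000.0):
    """True when intr/s exceeds the (assumed) threshold."""
    rate = interrupts_per_second(mpstat_output)
    return rate is not None and rate > threshold


idle_sample = """Linux 2.6.16.60-0.21-smp (xxxx) 05/31/10
CPU %user %nice %sys %steal %idle intr/s
all 0.00 0.00 0.60 0.00 99.40 154596.40
"""

busy_sample = """Linux 2.6.16.60-0.21-smp (xxxx) 06/03/2010
CPU %user %nice %sys %steal %idle intr/s
all 81.24 0.00 18.36 0.00 0.00 9832.73
"""

print(has_interrupt_storm(idle_sample), has_interrupt_storm(busy_sample))
```

Feeding it the output of mpstat collected over ssh from each server would give a quick list of affected systems.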

We have around 120 Linux virtual machines and I was only seeing this on about 5 systems. Looking at the output from procinfo, it is easy to see that the interrupts are timer interrupts:

    # procinfo
    Linux 2.6.16.60-0.21-smp (geeko@buildhost) (gcc 4.1.2 20070115) #1 SMP Tue May 6 12:41:02 UTC 2008 1CPU [xxxxx]

    Memory:      Total        Used        Free      Shared     Buffers
    Mem:       3867772      494576     3373196           0       11228
    Swap:      4194296           0     4194296

    Bootup: Mon May 31 14:24:38 2010    Load average: 0.00 0.16 0.18 2/152 5427

    user  :       0:00:19.94   2.8%  page in :  1054996  disk 1:    26937r    7117w
    nice  :       0:00:05.30   0.7%  page out:    28856  disk 2:      124r       0w
    system:       0:00:20.78   2.9%  page act:    30744
    IOwait:       0:01:28.12  12.2%  page dea:        0
    hw irq:       0:00:01.38   0.2%  page flt:  1514598
    sw irq:       0:00:01.32   0.2%  swap in :        0
    idle  :       0:09:32.81  79.6%  swap out:        0
    uptime:       0:11:59.66         context :   297632

    irq  0: 101039309 timer                 irq  9:         0 acpi
    irq  1:         9 i8042                 irq 12:       114 i8042
    irq  3:         1                       irq 14:      5660 ide0
    irq  4:         1                       irq169:     30998 ioc0
    irq  6:         5                       irq177:      1113 eth0
    irq  8:         0 rtc                   irq185:      2480 eth1

I picked one and just rebooted it from the command line in Linux. After the reboot it still showed the same symptoms: a very high intr/s. Shutting down all services and daemons still didn’t help. I then shut down and powered off the VM, and after powering it back on it was OK; the high interrupts per second had stopped.

We then experimented with a vMotion of one of these systems with a high intr/s, and this also seemed to work. “Good!” we thought, a non-interruptive fix. But when we tried a vMotion on another server it didn’t fix the problem. Trying a vMotion again on the same server then fixed it?!

It seems that there is no pattern to fixing this: a cold reboot works, and multiple vMotions sometimes help. All these systems were SLES servers with SP2 running the SMP Linux kernel, even though most (but not all) only had one vCPU.

Definitely a strange one. I’ll keep an eye on this.