Network changes April 29, 2010

Posted by vneil in ESXi, network, scripts, VMware.

When our environment was first set up it was difficult to get all the necessary buy-in from other departments, for example the network team.

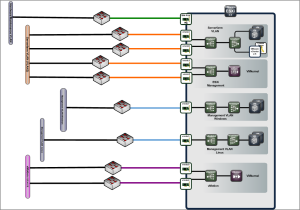

This meant that VLAN tagging / trunking was not feasible. We had to provide connections to 4 different virtual machine networks, so we needed a lot of network connections and could not make all of them redundant. The servers had 8 network connections, but once the ESXi / vMotion networks and the VM networks were allocated, the maintenance networks for the VMs did not get redundancy, and we had to drop one production network which wasn’t required straight away.

Later we organised in-house VMware training (given by the excellent Eric Sloof) and invited a couple of the key guys from the network team who we had been working with. This had the great effect of letting them see how VMware handles its internal networking, and it gave us all a chance to brainstorm ways of changing the network connections, as we had also started getting pressure to provide connections to the mainframe production network we had left out.

The result was a much better architecture, a reorganisation of how the adapters were used by VMware and the incorporation of 802.1Q VLAN tagging, which proves the benefit of spreading the word and getting buy-in from the other departments you deal with.

Seeing as I had 20 ESXi servers in the cluster to change, I created a script to do the definition for me. This wasn’t my own work but was cobbled together from scripts by PowerShell heroes like Alan Renouf and Luc Dekens.

Here is the script to create everything except the Management Network:

```powershell
$esxserver = Get-VMHost esxserver4.vmware.in
$vmoip = "172.1.101.4"

# Add vmnic4,vmnic6 to vSwitch0
$vSwitch0 = Get-VirtualSwitch -VMHost $esxserver -Name vSwitch0
Set-VirtualSwitch -VirtualSwitch $vSwitch0 -Nic vmnic4,vmnic6 -NumPorts 128

# add serverfarm portgroup to vSwitch0
New-VirtualPortGroup -Name "serverfarm" -VirtualSwitch $vSwitch0

# Configure portgroup policies for vSwitch0
$hostview = $esxserver | Get-View
$ns = Get-View -Id $hostview.ConfigManager.NetworkSystem

# set failover policy for serverfarm portgroup
$pgspec = New-Object VMware.Vim.HostPortGroupSpec
$pgspec.vswitchName = "vSwitch0"
$pgspec.Name = "serverfarm"
$pgspec.Policy = New-Object VMware.Vim.HostNetworkPolicy
# create object for nic teaming in port group
$pgspec.Policy.NicTeaming = New-Object VMware.Vim.HostNicTeamingPolicy
$pgspec.Policy.NicTeaming.nicOrder = New-Object VMware.Vim.HostNicOrderPolicy
$pgspec.Policy.NicTeaming.nicOrder.activeNic = @("vmnic4","vmnic6")
$pgspec.Policy.NicTeaming.nicOrder.standbyNic = @("vmnic1")
# load balancing
$pgspec.policy.NicTeaming.policy = "loadbalance_srcid"
# link failure
$pgspec.policy.NicTeaming.failureCriteria = New-Object vmware.vim.HostNicFailureCriteria
$pgspec.policy.NicTeaming.failureCriteria.checkBeacon = $false
# Failback
$pgspec.policy.NicTeaming.RollingOrder = $false
# notify switches
$pgspec.policy.NicTeaming.notifySwitches = $true
$ns.UpdatePortGroup($pgspec.Name,$pgspec)

# set failover policy for Management Network portgroup
$pgspec = New-Object VMware.Vim.HostPortGroupSpec
$pgspec.vswitchName = "vSwitch0"
$pgspec.Name = "Management Network"
$pgspec.Policy = New-Object VMware.Vim.HostNetworkPolicy
# create object for nic teaming in port group (opposite failover to other port group)
$pgspec.Policy.NicTeaming = New-Object VMware.Vim.HostNicTeamingPolicy
$pgspec.Policy.NicTeaming.nicOrder = New-Object VMware.Vim.HostNicOrderPolicy
$pgspec.Policy.NicTeaming.nicOrder.activeNic = @("vmnic1")
$pgspec.Policy.NicTeaming.nicOrder.standbyNic = @("vmnic4","vmnic6")
# load balancing
$pgspec.policy.NicTeaming.policy = "failover_explicit"
# link failure
$pgspec.policy.NicTeaming.failureCriteria = New-Object vmware.vim.HostNicFailureCriteria
$pgspec.policy.NicTeaming.failureCriteria.checkBeacon = $false
# Failback
$pgspec.policy.NicTeaming.RollingOrder = $false
# notify switches
$pgspec.policy.NicTeaming.notifySwitches = $true
$ns.UpdatePortGroup($pgspec.Name,$pgspec)

# create vSwitch1 for mainframe
New-VirtualSwitch -VMHost $esxserver -Nic vmnic3,vmnic5 -NumPorts 128 -Name "vSwitch1"
$vSwitch1 = Get-VirtualSwitch -VMHost $esxserver -Name "vSwitch1"
New-VirtualPortGroup -Name "mainframe" -VirtualSwitch $vSwitch1

# create vSwitch2 for server mgmt and vmotion
New-VirtualSwitch -VMHost $esxserver -Nic vmnic0,vmnic2 -NumPorts 128 -Name "vSwitch2"
# store the new switch in $vSwitch2, which the port group commands below use
$vSwitch2 = Get-VirtualSwitch -VMHost $esxserver -Name "vSwitch2"

# create servermgmt_linux (vlan3001)
New-VirtualPortGroup -Name "servermgmt_linux" -VLanId 3001 -VirtualSwitch $vSwitch2

# create servermgmt_win (vlan3002)
New-VirtualPortGroup -Name "servermgmt_win" -VLanId 3002 -VirtualSwitch $vSwitch2

# create vmotion (vlan3099)
New-VMHostNetworkAdapter -VMHost $esxserver -PortGroup vmotion -VirtualSwitch $vSwitch2 -IP $vmoip -SubnetMask 255.255.255.0 -VMotionEnabled $true -EA Stop

$hostview = $esxserver | Get-View
$ns = Get-View -Id $hostview.ConfigManager.NetworkSystem

# set failover policy for vmotion portgroup
$pgspec = New-Object VMware.Vim.HostPortGroupSpec
$pgspec.vswitchName = "vSwitch2"
$pgspec.Name = "vmotion"
$pgspec.vlanId = "3099"
$pgspec.Policy = New-Object VMware.Vim.HostNetworkPolicy
# create object for nic teaming in port group
$pgspec.Policy.NicTeaming = New-Object VMware.Vim.HostNicTeamingPolicy
$pgspec.Policy.NicTeaming.nicOrder = New-Object VMware.Vim.HostNicOrderPolicy
$pgspec.Policy.NicTeaming.nicOrder.activeNic = @("vmnic2")
$pgspec.Policy.NicTeaming.nicOrder.standbyNic = @("vmnic0")
# load balancing
$pgspec.policy.NicTeaming.policy = "failover_explicit"
# link failure
$pgspec.policy.NicTeaming.failureCriteria = New-Object vmware.vim.HostNicFailureCriteria
$pgspec.policy.NicTeaming.failureCriteria.checkBeacon = $false
# Failback
$pgspec.policy.NicTeaming.RollingOrder = $false
# notify switches
$pgspec.policy.NicTeaming.notifySwitches = $true
$ns.UpdatePortGroup($pgspec.Name,$pgspec)

# set failover policy for servermgmt_linux portgroup
$pgspec = New-Object VMware.Vim.HostPortGroupSpec
$pgspec.vswitchName = "vSwitch2"
$pgspec.Name = "servermgmt_linux"
$pgspec.vlanId = "3001"
$pgspec.Policy = New-Object VMware.Vim.HostNetworkPolicy
# create object for nic teaming in port group
$pgspec.Policy.NicTeaming = New-Object VMware.Vim.HostNicTeamingPolicy
$pgspec.Policy.NicTeaming.nicOrder = New-Object VMware.Vim.HostNicOrderPolicy
$pgspec.Policy.NicTeaming.nicOrder.activeNic = @("vmnic0")
$pgspec.Policy.NicTeaming.nicOrder.standbyNic = @("vmnic2")
# load balancing
$pgspec.policy.NicTeaming.policy = "failover_explicit"
# link failure
$pgspec.policy.NicTeaming.failureCriteria = New-Object vmware.vim.HostNicFailureCriteria
$pgspec.policy.NicTeaming.failureCriteria.checkBeacon = $false
# Failback
$pgspec.policy.NicTeaming.RollingOrder = $false
# notify switches
$pgspec.policy.NicTeaming.notifySwitches = $true
$ns.UpdatePortGroup($pgspec.Name,$pgspec)

# set failover policy for servermgmt_win portgroup
$pgspec = New-Object VMware.Vim.HostPortGroupSpec
$pgspec.vswitchName = "vSwitch2"
$pgspec.Name = "servermgmt_win"
$pgspec.vlanId = "3002"
$pgspec.Policy = New-Object VMware.Vim.HostNetworkPolicy
# create object for nic teaming in port group
$pgspec.Policy.NicTeaming = New-Object VMware.Vim.HostNicTeamingPolicy
$pgspec.Policy.NicTeaming.nicOrder = New-Object VMware.Vim.HostNicOrderPolicy
$pgspec.Policy.NicTeaming.nicOrder.activeNic = @("vmnic0")
$pgspec.Policy.NicTeaming.nicOrder.standbyNic = @("vmnic2")
# load balancing
$pgspec.policy.NicTeaming.policy = "failover_explicit"
# link failure
$pgspec.policy.NicTeaming.failureCriteria = New-Object vmware.vim.HostNicFailureCriteria
$pgspec.policy.NicTeaming.failureCriteria.checkBeacon = $false
# Failback
$pgspec.policy.NicTeaming.RollingOrder = $false
# notify switches
$pgspec.policy.NicTeaming.notifySwitches = $true
$ns.UpdatePortGroup($pgspec.Name,$pgspec)
```

Upgrading to vSphere on ESXi March 22, 2010

Posted by vneil in ESXi, upgrade, VMware.

We’ve recently gone through the process of upgrading our complete VMware infrastructure to vSphere and this is a short summary of how the process went. We needed to upgrade 2 vCenter Servers, 2 Update Managers and 30 ESXi host servers, not including any test clusters. We had been running vSphere 4 on our test servers for a while and didn’t come across any problems, so we planned to upgrade our production environment. Luckily vSphere 4.0 Update 1 came out shortly before we were due to deploy in production, so after testing it on the test servers we were able to go straight to Update 1 for our production servers.

The plan was:

- Upgrade vCenter, which included the following:

  - stop vCenter services

  - backup vCenter db

  - backup ssl certs

  - snapshot vCenter VM

  - upgrade vCenter Server

  - Run vCenter upgrade

- Upgrade VUM, which included:

  - stop VUM service

  - backup VUM db

  - upgrade Update Manager

- Upgrade converter/guided consolidation

- Configure new licenses in vCenter

- Configure VUM with host upgrade ISO/zip file for ESXi4.0 U1 (more on this later)

- Back up ESXi host server configurations with vicfg-cfgbackup on the vMA server

- Put host into maintenance mode and reboot into a network boot rescue Linux image to update BIOS, NIC firmware and FC firmware

- Reboot host and remediate host with VUM to upgrade ESXi

This was mostly planned using the upgrade guide PDF and on the whole it went very well, although there are a few things which I would probably add to this plan if I were to do it again.
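As a rough illustration of the host configuration backup step in the plan, here is a minimal sketch of a loop that could be run on the vMA server. The host names and the backup directory are hypothetical placeholders, and it assumes credentials are supplied through the VI_USERNAME and VI_PASSWORD environment variables. It only prints the commands (a dry run), so each one can be checked before being run for real.

```shell
#!/bin/bash
# Dry-run sketch: print one vicfg-cfgbackup "save" command per host.
# Host names and backup directory are hypothetical placeholders.
esxhosts="esxhost1 esxhost2 esxhost3"
backupdir="/home/vi-admin/cfgbackup"

backup_cmd() {
    # $1 = host name, $2 = directory to hold the backup file
    # -s asks vicfg-cfgbackup to save the host configuration to a file
    echo "vicfg-cfgbackup --server $1 -s $2/$1.cfgbackup"
}

for esxhost in $esxhosts
do
    # Only prints the command; run the printed line once it looks right.
    backup_cmd "$esxhost" "$backupdir"
done
```

Keeping one file per host, named after the host, makes it obvious which backup belongs to which server if a restore is ever needed.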

vCenter upgrade

This went very smoothly and we didn’t come across any big problems. The only issue was with the added roles and permissions for the datastores and networks, which meant that our Windows and Linux admins couldn’t provision new disks or add networks to VMs. This was quickly resolved by cloning and adding the new roles of Datastore consumer and Network consumer for each of the VM admin groups to the folders of the respective datastores and networks which were created as part of the upgrade. This is documented in the PDF (which I must have missed) on page 65.

I really like this new feature of permissions for datastores; it means we have much better control over which datastores are given to which VM admins.

VUM / Hardware

Since upgrading all the hosts we have started to see various strange hardware error alarms being displayed in vCenter. The errors are things like “Host Battery Status : I2C errors” or “System Memory” errors on no particular hardware device. Upon looking into it I thought this might have something to do with the updated CIM implementation and realised that we had loaded the standard ESXi4.0 U1 ISO image from the VMware site into VUM. Maybe we should have used an IBM specific image, as all our servers are the same xSeries models from IBM and came with ESXi installed on internal USB sticks. The errors we see come and go on different servers, which makes it difficult to tell if there are any real problems.

I opened a service call with IBM (who provide our VMware support) to ask if there was a customised version of ESXi for xSeries servers or if there were any known problems with ESXi4 on IBM hardware. After quite a bit of internal communication within IBM it seems there is an “IBM recovery CD” for ESXi but it doesn’t seem to come with any customisation apart from a message somewhere saying to report problems to IBM support rather than VMware support.

Now I am waiting for the CD to be sent just to see if it makes any difference while I also have open service calls with IBM for the individual problems we see. It seems the problem is to do with a “handshake problem between VMware and the IPMI” according to the service call and they are working on fixing the issue but it basically means we are stuck with these false alarms.

This is what we see:

Upgrading Firmware on ESXi

As ESXi does not have the Service Console it is harder to do things like upgrading the BIOS or changing settings on FC adapters. For this I set up our SLES AutoYaST server to network boot the SLES Rescue image on the ESXi server. This allowed me to NFS mount a share with Linux versions of the BIOS update, the Broadcom adapter firmware and the scli program to update the QLogic cards and check their settings.

Virtual Machine Upgrading

You may have noticed this is missing from the upgrade plan, which is kind of true: all of the previous steps were done without any outage of applications or services (apart from vCenter, but this has a controlled set of users). The next step of upgrading VM Tools and the virtual machine hardware will require further planning by the respective Windows and Linux admins (and will follow in another post :-))

Logging in ESXi March 3, 2010

Posted by vneil in ESXi, logging, VMware.

After listening to last week’s VMware Communities Roundtable podcast I thought I would create a short post on ESXi logging. It was episode #83, which focused on ESXi and was very interesting; it was geared towards administrators who are familiar with ESX and covered the pros and cons of moving to ESXi.

One thing that was mentioned by the main guest, VMware’s Charu, which I hadn’t tried before, was to set up persistent logging for an ESXi host. As he mentioned, although there is logging in ESXi which gets stored in the /var/log/ directory, it is all in memory, so if the host crashes all logs are lost. While I already had remote syslog set up, it seems this local logging is a different log file.

On one of our test servers I went into Configuration -> Advanced Settings (Software) -> Syslog -> Local and entered a directory and filename on a VMFS datastore, and it started logging immediately to the specified file.
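The same setting can probably be made from the vMA server rather than the client. The sketch below is a dry run that only prints the vicfg-advcfg commands. It assumes the advanced option behind the Syslog -> Local field is called Syslog.Local.DatastorePath and takes a value of the form "[datastore] /path/file", which is my understanding for ESXi 4.x and worth confirming with the -g (get) command first. The host, datastore and file names are hypothetical.

```shell
#!/bin/bash
# Dry-run sketch: print the commands to read and set the local syslog file.
# The option name Syslog.Local.DatastorePath and the "[datastore] /path"
# value format are assumptions to verify against your own host.

syslog_get_cmd() {
    # $1 = host name
    echo "vicfg-advcfg --server $1 -g Syslog.Local.DatastorePath"
}

syslog_local_cmd() {
    # $1 = host name, $2 = datastore name, $3 = log file path on that datastore
    echo "vicfg-advcfg --server $1 -s \"[$2] $3\" Syslog.Local.DatastorePath"
}

# Hypothetical host, datastore and file name.
syslog_get_cmd esxhost1
syslog_local_cmd esxhost1 datastore1 /logs/esxhost1.log
```

Using a file name that includes the host name keeps the logs apart when several hosts write to the same shared datastore.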

I then tried to see if it was a copy of a log that was already being created somewhere on the host and while it looks similar to the /var/log/vmware/hostd.log file it seems to be more verbose.

The other logs that I am aware of that are useful are:

/var/log/messages

/var/log/vmware/hostd.log

/var/log/vmware/vpx/vpxa.log

These are available via the browser interface (http://hostname/host). The messages file is what is sent to the remote syslog server, if one is configured. There are also logs in /var/log/vmware/aam/ that relate to the HA agent on the host, and if there are problems with a host enabling the agent it is sometimes fruitful to look here at the latest files.

I would say that setting up both local persistent and remote syslog logging is a very good recommendation when configuring ESXi hosts, especially in a production environment.

Setting preferred paths in ESXi February 12, 2010

Posted by vneil in ESXi, scripts, VMware.

Quite a while ago, when I was setting up our current production environment, I had to think about how to set up the multipathing for the SAN LUNs. We have an FC SAN fabric connected to a couple of HDS storage systems and each of our ESXi host servers has 2 dual port FC HBAs. All LUNs would be visible on all four HBAs so each LUN ends up with 4 paths.

To make this simple when setting up the cluster we went for a standard 500GB size for the LUNs and had about 8 LUNs allocated to start with. We needed to somehow level the usage of these LUNs across the 4 paths.

These servers were running ESXi 3.5, where the round robin path policy was still experimental, so the choice of multipath policy was between Fixed and Most Recently Used (MRU). After some investigation I chose Fixed, because with MRU you could possibly end up with all LUNs on one path if several path failures go unnoticed.

To simplify the spreading of LUNs over the multiple paths, each LUN was allocated a path in order: for example LUN 1 to path 1, LUN 2 to path 2 and so on, with LUN 5 going to path 1 again. Of course this needed to be set on all 20 ESXi servers in the cluster, and it would have to be set again for any new LUNs that were added.

Nowadays I would maybe look at doing this with PowerShell, but back then I was still quite new to it, and as I was more comfortable in Unix I used the VIMA appliance and set up a simple shell script using the vicfg-mpath command to do the work for me.

Here is the script:

```shell
#!/bin/bash
#
# Script to set preferred paths in rotational method
# Set VI_USERNAME and VI_PASSWORD environment variables before running.
#
esxhosts="esxhost1 esxhost2 esxhost3 esxhost4 esxhost5 esxhost6 esxhost7 esxhost8"

echo "===================== Start `date` =========================="

for esxhost in $esxhosts
do
    # first space-separated field of each disk line is the path name
    LUNs=`vicfg-mpath --server $esxhost -b | grep "vmfs/devices/disks" | cut -d' ' -f1 | sort -u`
    if [ -z "$LUNs" ] ; then
        echo "Error getting luns/paths for $esxhost"
    else
        echo "Setting preferred paths for $esxhost "
        for LUN in $LUNs
        do
            # LUN id is the third colon-separated field of the path name
            lunid=$( echo $LUN | cut -d: -f3)
            # rotate over 4 HBAs, offset by 2 to give vmhba2..vmhba5
            pathToSet=$(( $(($lunid % 4)) + 2 ))
            preferredPath=$(echo $LUN | sed "s/vmhba./vmhba${pathToSet}/")
            # #debug# echo Lun = $LUN lunid = $lunid path = $pathToSet preferredPath = $preferredPath
            echo Executing vicfg-mpath --server ${esxhost} --policy fixed --lun ${LUN} --path ${preferredPath} --preferred
            vicfg-mpath --server ${esxhost} --policy fixed --lun ${LUN} --path ${preferredPath} --preferred
        done
    fi
    echo "----------------------------------------------------------------------------------------"
done
```
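To make the rotation in the script easier to follow, here is a small standalone sketch of just the arithmetic, with no calls to vicfg-mpath. It assumes path names have the adapter:target:LUN form (for example vmhba2:0:5), which is what the cut and sed calls in the script imply, and that the four FC ports appear as vmhba2 to vmhba5, which is inferred from the +2 offset. The function name is made up for the illustration.

```shell
#!/bin/bash
# Standalone sketch of the path rotation used in the script above.
# Assumes path names look like vmhba2:0:5 (adapter:target:LUN).

preferred_path() {
    # $1 = any existing path name for the LUN
    local path=$1
    local lunid hba
    lunid=$(echo "$path" | cut -d: -f3)   # third field is the LUN id
    hba=$(( lunid % 4 + 2 ))              # 0..3 shifted onto vmhba2..vmhba5
    # swap the adapter number for the chosen one, keep target and LUN
    echo "$path" | sed "s/vmhba./vmhba${hba}/"
}

# Print the preferred path for LUN ids 1 to 8 as seen on the first HBA.
for lun in 1 2 3 4 5 6 7 8
do
    echo "LUN $lun -> $(preferred_path "vmhba2:0:$lun")"
done
```

Because the adapter is derived from the LUN id alone, every host picks the same HBA for a given LUN, and LUN ids that are 4 apart share an adapter.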

Getting started February 11, 2010

Posted by vneil in ESX, ESXi, Virtualisation, VMware.

As I mention in the about page I have decided to start this blog to try and give a little bit back to the community and post some of my experiences with working in virtualisation.

The idea when I thought about starting this blog was to add more information about ESXi to the VMware blogging arena, as most of the information I read was about ESX. Unfortunately, since I decided to create this blog (it’s taken me a few weeks to get going), VMware have beaten me to it. They’ve started a major push to promote ESXi and seem to be selling the move from ESX to ESXi as an upgrade, which I find a strange choice of words. I guess they could mean an upgrade from ESX3.5 to ESXi4.0, because otherwise it would just be a change of install base and methods, not an upgrade. VMware also added an ESXi contest to their vSphere Blog, which has meant a sudden glut of ESXi blog entries popping up, so it seems my timing for starting an ESXi focused blog was a little off.

I won’t be downhearted; I will continue with my mission to help promote ESXi and detail some of the experience we have had running it in our 2 production clusters, one of 10 nodes and one of 20.